Life imitates algorithms

2023 is already shaping up to be Optimal

Meta’s AI division is the gift that keeps on giving, at least as far as tremendous content for this newsletter is concerned. Aficionados will recall Meta’s disastrous rollout of a deeply flawed chatbot/Large Language Model, followed by the spectacle of its chief AI scientist commenting “I really don’t care, do you?” in response to the bot’s abject and potentially life-endangering failures. I paraphrase, but barely.

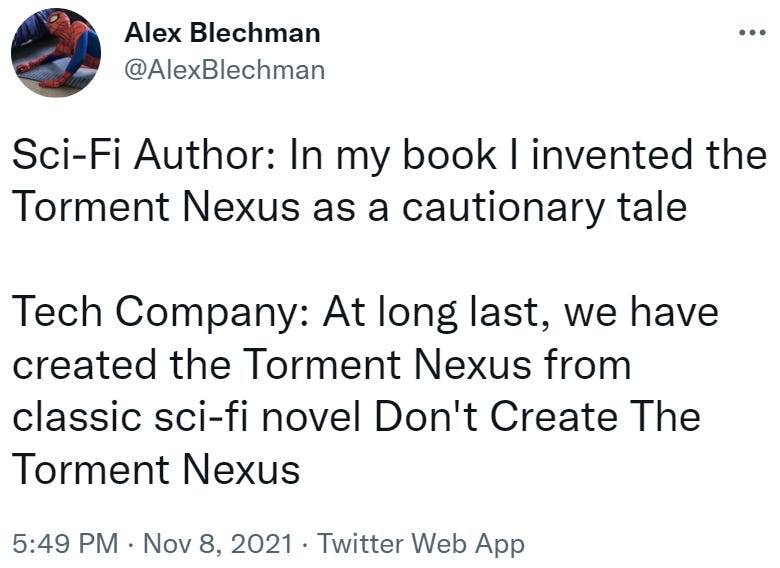

Meta closed out 2022 with a shout-out to my novel, Optimal. Probably unintentional, but you never know. Fun fact: Meta inspired the infamous Torment Nexus meme.

In the waning days of 2022, Meta AI put out a paper titled “OPT-IML: Scaling Language Model Instruction Meta Learning through the Lens of Generalization.” While less egregious than the chatbot, it’s definitely relevant to the finely tuned algorithmic feedback loops that power the Optimalverse. When writing the backstory for Optimal, I toyed with several too-clever-by-half acronyms for the technical leaps that turn our present into its future, but I decided all my ideas were too hacky. Not a problem for the company that named its LLM “Galactica” after the Foundation trilogy (or more aptly, the Hitchhiker’s Guide to the Galaxy).

While I’d love to tell you that OPT-IML is the closest thing to Optimal in the real world at the moment, alas, I cannot. Minor-ish spoilers follow, for those who have not read the book.

OK? OK.

The backstory of Optimal includes some discussion of machines writing code. Not the main theme of the book at all, but a not-unimportant development in the history of the Optimalverse, which paves the way for other more-spoilery developments. Optimal’s primary theme centers on how humans respond to technology rather than the technology itself, which is why we never arrive at a Skynet or HAL 9000 moment. But the idea of “code writing code” allowed me to fast-forward through what would have been a lot of otherwise-tedious backstory macroeconomics.

Turns out, “code writing code” is arriving much faster than even I would have guessed. The current leader in the field, ChatGPT, has inspired a torrent of news coverage and analysis arguing over whether it will or will not replace human coders. What’s fairly clear is that generative AI can write code, whether or not it’s good, and it will probably get better as time passes. Computer code is a highly structured form of language, and it lends itself to a predictive-text approach, although it still needs a lot of human babysitting.

I asked ChatGPT how it would solve the key data analysis question that leads to Optimal’s (arguably) biggest spoiler, and good news: It didn’t give the answer that the algorithm in Optimal came up with. Not yet, anyway.

The rise of generative text includes other, more insidious possibilities. MIT Technology Review recently hit on the biggest one, an issue that is relevant to the Optimalverse, although I didn’t explicitly explore it in the book:

> Large language models are trained on data sets that are built by scraping the internet for text, including all the toxic, silly, false, malicious things humans have written online. The finished AI models regurgitate these falsehoods as fact, and their output is spread everywhere online. Tech companies scrape the internet again, scooping up AI-written text that they use to train bigger, more convincing models, which humans can use to generate even more nonsense before it is scraped again and again, ad nauseam.

This is a mind-blowing prospect, but one that is all too real, and one that definitely exists in the Optimalverse. Of course the technologists have a solution: Train the models to recognize AI-generated text. Since we still can’t train a perfect spam filter after all these years, color me skeptical.

Optimal 2.0, writing itself daily.