Mister Roboto

My heart is human, my blood is boiling, my brain I.B.M.

An early concept in artificial intelligence is the Turing Test, which has a long and fascinating scientific history that I am going to ignore for the moment. Instead, let’s talk about it as a lingering cultural artifact. The basic idea is this: It’s too hard to figure out if sophisticated computers can think, so a better measure of artificial intelligence is whether a computer’s outputs are indistinguishable from those of a human. If the computer can convince you it thinks, then for all intents and purposes, it thinks.

At the time it was developed (1950), this was a smart and interesting take. In 2022, it’s outlived its usefulness. Unfortunately, no one told the A.I. guys. Some of them, anyway. There’s a vigorous, if not robust, debate about whether large language models (LLMs) like ChatGPT, which produce generative text, experience anything like consciousness. I say the debate is not robust because on one side are a host of experts carefully explaining that predictive text is just predictive text, and on the other side are a bunch of dudes saying “but it’s spooky.”

So what does all this have to do with the Turing Test? It seems to me that most of the generative text business is predicated on passing it, at the expense of things like accuracy and ethics. These products are designed first to pass as human, and functionality comes second, if at all. Or in the words of Meta’s chief AI scientist Yann LeCun, “[M]isinformation is harmful only if it's widely distributed, read, and believed. LLM text generation doesn't make this any easier.” In an extensive series of bitchy tweets, LeCun has made the case that the product isn’t designed for accuracy or truth, and that he sees no problem with that, even though his “Galactica” was named after an encyclopedia. Well, a fictional encyclopedia. I can think of a more apt metaphor.

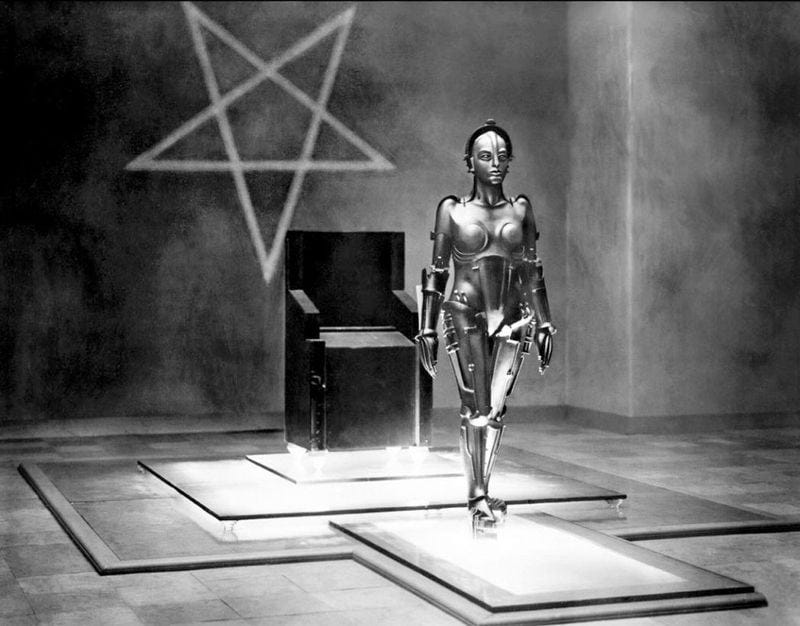

The goal of a product like Galactica, which was rolled out with all the carelessness and hubris of Tom and Daisy Buchanan, is to persuade users that something intelligent lies on the other side of the screen. The goal of ChatGPT, which was rolled out with considerably more thought and care, is more or less the same. In other words, the first measure of success for a generative A.I. app is whether it can persuade you to accept an untrue premise.

It *should* be obvious why it's problematic to create a piece of software that excels at persuasion without concern for accuracy, honesty or ethics. But apparently it's not.

As long as A.I. is created first for verisimilitude, we’re going to have this problem: an arms race to see who can develop the most convincing generative liar. WHAT COULD POSSIBLY GO WRONG?

To its credit, ChatGPT will tell you the truth about itself when asked. Unfortunately, too many people are willing to rush right past its statement of identity.

That "as a large language model" ChatGPT kicked out for you at the end is boilerplate. There's a veneer of boilerplate responses on top of the underlying engine, and the developers patch that veneer whenever the underlying engine does something problematic in a new situation. But that doesn't fix the underlying engine. And it's not hard to work around the veneer.