"Are you surprised..."

A tribute to proving things we already suspected to be true

One of the classic reply-guy routines (especially on Twitter, but now creeping into Bluesky) is the “Are you surprised” motif. It goes like this.

Post: “Horrified to learn what Anakin did to the younglings.”

Reply guy: “Are you seriously surprised that the dark side is evil? Come on.”

People are assholes (are you surprised?). But beyond the simple dickishness of the reply guy, there’s a more universal truth: people generally aren’t interested in research that confirms what they already “know.”

The thing is: our intuitions often betray us. Things we suspect are true are not always true. And things that are true but seem obvious still benefit from scrutiny. Sometimes we need to know why they are true, how they play out, with what intensity, and how we can flip the script. With that in mind, here are a few recent papers that confirm things we might have guessed and, importantly, shed light on why, how, and how much.

This study used MRI brain scans to show that exposure to hate speech diminished responses in the parts of the brain associated with empathy. It reminds me of the groundbreaking MRI work Nafees Hamid has done on jihadist supporters. For me, the value of this genre of research lies less in what it may tell us about causes and more in how it can explain, confirm, or falsify what we otherwise believe. In extremism studies, lots of counterintuitive things turn out to be true. So while we might have guessed that hate speech reduces empathy, this kind of brain research lets us state it much more definitively.

Turnout Turnaround: Ethnic Minority Victories Mobilize White Voters

When ethnic minority candidates win elections in majority-White districts, White voters are highly mobilized in subsequent elections. If you wanted to call this the “Barack Obama effect,” you could probably get away with it.

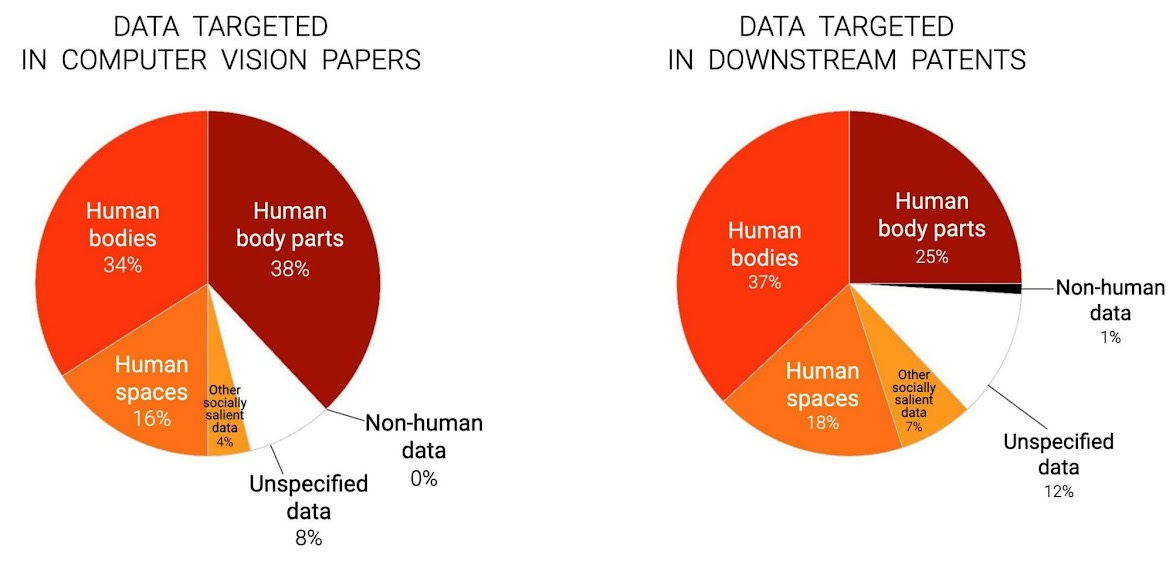

In an important confirmation of a claim that seems obvious to many people but is hotly contested by many others, researchers found that most computer vision research leads directly to the patenting of new technologies for surveilling humans. The paper is by Pratyusha Ria Kalluri, William Agnew, Myra Cheng, Kentrell Owens, Luca Soldaini, and Abeba Birhane.

Large Language Models as a Substitute for Human Experts in Annotating Political Text

This one might be slightly less obvious to folks, but one key critique that I and many others have of LLM-based generative AI is that it’s not fit for purpose. There’s no logic behind using it as a search engine, or a therapist, or even a personal assistant. The work Large Language Models are more obviously suited for is, duh, language-based tasks: many sorts of text classification and even simple analytical tasks. I’ll probably have more to say about this down the line. In the meantime, this paper shows that automated annotation of political text works pretty well! Kudos! Now let’s talk about bias in the dataset and intellectual property and…